House Takes Deep Dive into Online Fakes

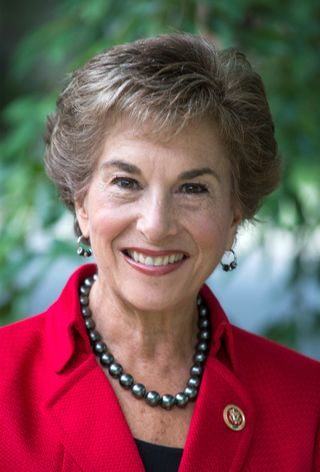

Rep. Jan Schakowsky (D-Ill.) used an informational hearing on deep fakes and other online manipulation to hammer Big Tech in general and Facebook in particular, while Republicans found more to like on the edge.

The hearing, "Americans at Risk: Manipulation and Deception in the Digital Age," held in the House Consumer Protection Subcommittee that Schakowsky chairs, exhibited the current political split over how to address a problem both sides acknowledge: the rise of online deception.

Generally, Democrats who used to treat the edge as plucky upstarts challenging the big content and distribution companies have come around to seeing edge providers as even bigger, now entrenched, companies in need of minding. Republicans are promoting self-regulation by the edge, as they traditionally have for Big Media. Both sides of the aisle agree, though, that there needs to be some governor, whether self-regulation, government regulation or a mix of both, on companies with net worth exceeding some countries' GNP and with huge stakes in arguably the most pervasive and transformative technology in human history.

Schakowsky said that "Big Tech" had failed to respond to the "grave threat" of deep fakes, dark patterns, bots, and other technologies that are hurting the public in direct and indirect ways.

While Facebook this week took steps to try to weed out deep fakes, Schakowsky was not assuaged. She said Facebook had scrambled to announce a new policy this week that struck her as "wholly inadequate" since it would not have weeded out the "cheap fake" video of House Speaker Nancy Pelosi (D-Calif.) that was slowed to make her appear drunk, a video that amassed millions of views but prompted no action from Facebook.

Schakowsky said that for too long, Big Tech has argued that their e-commerce and digital platforms deserve special treatment and a light regulatory touch, but she said the subcommittee is finding that consumers can be harmed as easily online as in the physical world, and in some cases the online dangers are greater. She said the subcommittee must make it clear that protections that apply in the physical world apply in the virtual world.

But Schakowsky did not confine her criticism to Big Tech. She said Congress had taken a laissez-faire approach to online deception over the past decade, while the Federal Trade Commission has lacked the resources, authority and will, a combination that has left people feeling helpless.

She raised the spectre of removing the Sec. 230 carveout that shields Facebook and other edge platforms from liability for third-party content, a shield that she said many have argued has led to inadequate policing of things like piracy and extremist speech. She said Big Tech appeared "wholly unprepared to tackle the challenges we face."

She said Big Tech has failed to regulate itself, pointing to Facebook chief Mark Zuckerberg's apology tour, and saying Facebook's deep fake actions this week left a lot to be desired.

Schakowsky asked witness Monika Bickert, VP of global policy management at Facebook, why the new deep fakes policy only covers AI-manipulated video, rather than "cheap fake" human-doctored content, and why it only applies to making people appear to say things they didn't, and not to edited images, like the slowed-down Pelosi video.

Bickert said that there were other ways such videos could or would be flagged, like a label identifying them as false information.

House Energy & Commerce Committee chairman Frank Pallone (D-N.J.) cited the manipulation of video to create nonconsensual porn. Bickert pointed out that such content would already violate Facebook's policy against nudity and porn in general.

Bickert said that Facebook was always improving its policies and enforcement to meet the moving targets of new tech, but that it welcomed collaboration with other stakeholders, including lawmakers, to "develop a consistent industry approach."

Ranking member Cathy McMorris Rodgers (R-Wash.) argued that more regulation was not the answer and that manipulation of the public was nothing new. She cited the "yellow journalism" of the newspapers of William Randolph Hearst and Joseph Pulitzer more than 100 years ago.

She said the lack of trust in those sensational headlines and stories had forced those outlets to clean up their act, with the name Pulitzer, for example, ultimately meaning something quite different.

Rodgers signaled that the country was at a similar inflection point, but that the answer to rebuilding online trust was not more regulatory mandates and government action, but giving the public more information to make their own decisions, and allowing tech companies to innovate. She warned that if regulations put a crimp in such innovation, other countries, like China, could become the leader in AI.

Echoing the Trump Administration's new AI regulatory guidelines, Rodgers said that U.S. tech companies must continue to be free to innovate in AI, facial recognition and other technologies so that U.S. values of freedom and independence, not those of authoritarian regimes, undergird the technology.

House E&C ranking member and former chairman Greg Walden (R-Ore.) pointed out that the committee had already grilled Facebook founder Mark Zuckerberg and Twitter CEO Jack Dorsey last year and suggested that had prompted improvements in "questionable practices" in a "critically important ecosystem."

He said that shining a light on issues, as the hearing was doing, could often lead to swifter action than government can manage.

He said there was proof some companies were cleaning up their platforms, and said he appreciated the work they were doing, including what he said were significant changes by Facebook after their hearing with Zuckerberg last year, including reforming privacy settings, taking down malicious entities, and investing in legitimate local news operations.

Walden said Facebook, Twitter and YouTube had been working together to tackle terrorist content. He thanked them for that as well.

Walden said that the online ecosystem was far from perfect and companies could "of course" be doing more to clean up their platforms. But he said he expected them to do so, and they seemed to be working on that.

He also suggested that edge providers were caught between something of a rock and a hard place on the issue of Sec. 230 liability and how they should or shouldn't police their platforms.

"This is tough stuff," he said. "I have a degree in journalism. I'm a big advocate of the First Amendment and it can be messy business to on the one hand take down things we don't like and still stay on the right side of the First Amendment because vigorous speech, even when it is inaccurate, is still protected."

"If you go too far, then we yell at you for taking things down that we liked. And if you don't take down things we don't like then we yell at you for that, so you are kind of in a bit of a box, and yet we know Sec. 230 is an issue we need to revise and take a look at."

Contributing editor John Eggerton has been an editor and/or writer on media regulation, legislation and policy for over four decades, including covering the FCC, FTC, Congress, the major media trade associations, and the federal courts. In addition to Multichannel News and Broadcasting + Cable, his work has appeared in Radio World, TV Technology, TV Fax, This Week in Consumer Electronics, Variety and the Encyclopedia Britannica.